Write it down, write it down, write it down.

September 8 Update: On the importance of "writing it down," + AI and education, and more.

Welcome to Young Money! If you’re new here, you can join the tens of thousands of subscribers receiving my essays each week by adding your email below.

A quick PSA: 1) Several of you have sent me some very cool leads / intros to creators / entrepreneurs to chat with. Thank you, I love you all. 2) I do read all of my email replies and try to respond to as many as I can, so if you have someone you think I should meet, def shoot me a note!

Write it down, write it down, write it down.

As a “non-technical” San Francisco resident (a slur used by software engineers and programmers to describe normies like me who weren’t building React apps pre-ChatGPT) living through an AI boom, I think it’s important to ramp up my knowledge of how all of these AI tools work under the hood. This would, of course, make me better at my job as an investor, but it’s also a hubris thing. It annoys me when I’m interested in a thing, or I use it a lot, and I don’t really know how it works.

My method for speed-running this knowledge acquisition has increasingly been to build things with the technology I’m interested in. Before AI coding assistants, this would have been difficult to do without a programming background, but now with Claude Code / Cursor / ChatGPT, you really can “just do things.”

My most recent interest was learning how to fine-tune a model. You hear about “fine-tuning” and “training” models all the time, but what does that actually mean? Like, what are you, the “fine-tuner,” actually doing? So I decided to fine-tune one of OpenAI’s models by training it on all of my travel blogs to create a chat model that can generate “Jack Raines-style travel blogs” on a whim.

The build only took a few hours, and after taking it live, I spent another hour or two documenting the whole process for future reference.

I’ve always been a big proponent of “writing is thinking,” and I’ve long believed process documentation was important, which shouldn’t be a surprise considering that I am a writer. But I think the importance of slowing down and writing out your thoughts and processes has increased 10x now that AI tools are so prevalent.

Why?

Because knowledge acquisition used to be an automatic consequence of doing or researching anything, but thanks to AI, the end result and the knowledge you used to gain by reaching it have become decoupled. Writing is the forcing function that ensures you actually retain knowledge from AI-enabled projects.

Pre-ChatGPT, it was pretty difficult to reach your end result or ideal output without learning, along the way, how the thing you were building (or the idea you were researching) actually works. Three years ago, if you wanted to create a simple Python tool that let you forward emails to a particular address and receive Spanish translations of those emails a few seconds later (I spun this up a few weeks ago), you would have had to understand the logic of the code itself and be very intentional about the software stack you used to connect all of the pieces. Your intuition was being refined the entire time you were building the thing.

Now? You can hack the whole thing together in a few hours with coding assistants without having any idea how the backend you just built “works.” The result? You have a functional tool, but your actual “learnings” from building it are minimal. If you spend an hour or two afterward documenting your process, however, you’ll retain much more knowledge of “how” you built it, which will let you move faster and more purposefully on future projects. And if you take the time to read through the script(s) and analyze what the code is actually doing, your intuition about coding logic will improve. Again, pre-AI, your intuition was strengthened simply by doing the work. But now that you can streamline 90% of the labor, you have to be intentional about the learning.

The same is true for anything “research-y.” Say I’m digging into the marine robotics space as diligence for a couple of investment opportunities. I could almost certainly offload most of the cognitive load of the “research” to Gemini Deep Research and then read through the thorough, well-written, AI-generated report it produces. The problem is that a fully AI-generated report will be logically sound on its face, and while reading it will obviously inform you about your topic of interest, reading without writing won’t reveal all of the things you don’t know.

Writing about a topic makes the holes in your knowledge base immediately obvious: when you’re working through an idea and hit an information gap, you get stopped mid-sentence. Those gaps inform the direction of your research, and you accumulate more and more knowledge as you work to fill them. You have to do the writing yourself to retain the lion’s share of the ideas and, more importantly, to strengthen your intuition over time.

Writing is also powerful reinforcement learning: ideas stick with you better when you write them out and read them. That’s not to say that we shouldn’t use AI assistants. Claude Code is fantastic, and my ChatGPT usage is absolutely contributing to global warming. But if you aren’t retaining the knowledge related to your work, AI isn’t giving you leverage. It’s turning you into a commodity.

What I’m Working On:

Here’s the full blog post on building the Travel Blog GPT. The whole thing took a few hours; if you’re curious, you can probably use my code, download your own content, and spin up something similar. Links to my GitHub are included in the post.

I also spent part of the weekend spinning up a “niche subreddit” scraper to look for some potentially interesting leads for our Creator Fund. Again, if you (or someone you know) have built a compelling social media presence around a particular niche and are building a business on top of it, I’d like to chat. I’ve met some really cool folks over the last few weeks thanks to you guys.

We are launching a Slow Ventures etiquette school for founders. (I’m serious; see Sam’s tweet here.) Too many founders have mastered the art of fine-tuning LLMs and raising capital from VCs, but what about soft skills like setting the table, maintaining eye contact, and properly mixing a drink? This is not a joke. If you want to join cohort one, add your info here.

What I’m Interested In:

AI’s impact on education. AI could, and should, disrupt a lot of fields, but so far, much of its economic impact outside of coding has been “meh” due to slow enterprise adoption and edge-case issues. That being said, I think education and “how we do school” are on the verge of being turned upside down due to a mix of factors, namely: shifting societal expectations of school, the rising cost of education, and AI’s ability to create personalized lesson plans tailored to each student. I met a super interesting founder building an alternative school system last week, and I also thought this Patrick O’Shaughnessy interview with Joe Liemandt on “Alpha School” was fascinating. Ed-tech is traditionally a tough market, but I do think this is one of the places where AI isn’t just a shiny toy, but a legitimate force for change.

Upstart media companies are growing up. One reason traditional media companies have struggled in an increasingly digital, short-form world is that their teams and headcounts no longer match the current reality. The result? Wages that haven’t kept up with inflation and constant layoff cycles. The winners of this shift were the creators who realized they could build their own platforms on Substack, beehiiv, and other newsletter services, went independent, stayed lean (and often solo), and built profitable six- and seven-figure businesses on their own. It’s easier to build a lean business from scratch that can adapt to the new world than to change a 100-year-old institution (though The Wall Street Journal is trying: it is hiring a “talent coach” to teach journalists how to be creators). But the “upstart” media companies are hardly upstarts anymore. A couple of examples: Emily Sundberg (founder of FeedMe / friend of the newsletter) just announced two new hires, and Paramount is looking to buy The Free Press for $100 - $200m. I’m curious to see how headcounts, hiring priorities, and the scope of media roles continue to evolve as some of these “newer” media businesses mature.

What I’m Reading:

The Patrick O’Shaughnessy episode with Joe Liemandt is a must-listen if you’re at all interested in AI’s impact on education or how students actually “learn.”

I was digging into the DTC / food space last week, and this conversation between Alex Hormozi and ButcherBox founder Mike Salguero on how Mike bootstrapped the business to $550m is great.

Henrik Karlsson is such a good essayist; I loved his recent article on the transformational power of sustained attention.

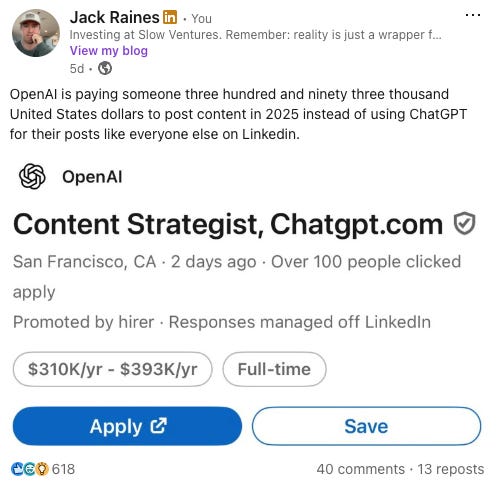

OpenAI is paying how much for a content strategist?

- Jack

I appreciate reader feedback, so if you enjoyed today’s piece, let me know with a like or comment at the bottom of this page!
