Financial Modeling Will Be Obsolete

Claude, Claude, Claude, and more Claude.

Welcome to Young Money! If you’re new here, you can join the tens of thousands of subscribers receiving my essays each week by adding your email below.

Apologies for the lack of writing over the last week or two. Normally, weekends are for writing, but for the last two weeks I was running all over Manhattan touring apartments, ordering furniture, etc. The good news: I found a sick spot near Union Square, and that spot now has a bed, bedside table, desk, chair, unbuilt Ikea bookshelf (Ikea sent me a lot of wood planks but no screws, classic), a computer monitor, and a lot of kitchen stuff. Still very much a work in progress, but I got some writing done yesterday, which was nice.

Claude does Excel

Over the summer, I spent a lot of time messing around with Claude Code and Cursor, for three reasons:

1. If you’re investing in early-stage startups, knowing the current capabilities of large language models is table stakes. Coding assistants were the most developed LLM “products” and had seen skyrocketing adoption, so it would have been ignorant not to test them out myself.

2. AI coding assistants had made simple software creation and data manipulation accessible, for the first time in history, to someone “nontechnical” like me.

3. I thought there was real career risk in not knowing how to use these tools and, conversely, real career advantage in getting up to speed quickly.

The first thing I built, in Cursor, was a rolodex that consolidated all of my contacts across Twitter, LinkedIn, my phone, and email. Initially, I had no idea what I was doing. I had never used API keys or even written a simple Python script, so it took hours to get used to Cursor’s IDE. But after a weekend of hacking away and troubleshooting with ChatGPT, it worked. I still use that rolodex.

The second thing I built, with Claude Code, was a translation tool allowing me to forward any newsletter/blog to “translate@translatemynewsletter.com” and receive a Spanish translation of that newsletter in my inbox a few moments later.

The UX wasn’t great in June 2025, particularly for someone who had never coded and especially with Claude Code, because I had to access it through my computer’s terminal. But it worked. And coding assistants have only continued to improve, to the point that employees at the big AI labs aren’t even “writing” code anymore. AI can just do it.

Why is AI so good at coding, and why was coding the first real “enterprise” use case to take off? At the risk of grossly oversimplifying, it’s because there were virtually unlimited troves of code to train models on, and code has objectively “right” and “wrong” structures. Miss a parenthesis in a Python script, and it won’t run. Get the structure right, and it runs. Train on billions (trillions?) of examples of code, and suddenly AI can write code quite well. Humans stopped writing code in 2025. Yes, sure, it takes time for this trend to flow through the full economy, but coding assistants are the worst they’ll ever be, and they’re actually quite good.
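The “right vs. wrong” point is easy to see in practice. A parser gives an instant, objective verdict on whether code is well-formed. Below is a minimal Python sketch using the standard library’s `ast.parse`. Note that it only checks syntax, not whether the code does what you intended.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Return True if `source` parses as Python, False on a syntax error."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


print(is_valid_python("print(sum([1, 2, 3]))"))  # True
print(is_valid_python("print(sum([1, 2, 3])"))   # False: one missing parenthesis
```

That cheap, automatic pass/fail signal is what makes code such a friendly target: every attempt can be checked without a human in the loop.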

My thought at the time was: what comes next? In other words, what other computer-based workflows have billions of examples of “correct” and “incorrect” scripts that can be verified formulaically, have millions (or more) of users, and subject those users to tedious time-sucks as they try to get the formulas right? Financial modeling (and Excel use, more broadly) was the next low-hanging fruit.

The real issue wasn’t technical capability. Microsoft’s Office JavaScript API has been around for a while, Claude’s API went live in ~2023, and Anthropic added tool calling in 2023 and 2024, so you could have “built” this functionality a year or two ago. But the product wasn’t there yet.

Coding assistants blew up so quickly because 1) there was a lot of training data and 2) code has a “right” and “wrong” state, but also because the UX just didn’t matter as much. Accessing Claude Code through the terminal wouldn’t have intimidated a software developer because… they were used to that interface. But (and this is ignoring compliance issues) no second-year JPMorgan IB associate is going to tie together the APIs needed to build an automated financial modeling tool. The tech was already there; the product wasn’t. But now? A chat window opens on the right side of the screen within Excel, and you can ask Claude to manipulate, analyze, and create anything you want directly in the spreadsheet.
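The “tie together the APIs” plumbing looks roughly like this:

1. You describe a tool to the model.
2. The model replies with a structured request to use that tool.
3. Your code carries the request out.

Below is a minimal Python sketch under some loud assumptions. `write_cell` is a hypothetical tool invented for illustration, and a plain dict stands in for the spreadsheet (a real add-in would go through Office.js). The dict shapes follow Anthropic’s tool-use format as I understand it, and no API call is made.

```python
# Minimal sketch: one hypothetical spreadsheet tool plus a local dispatcher.
# Shapes follow Anthropic's tool-use format (a tool with an "input_schema";
# "tool_use" blocks in, "tool_result" blocks out). "write_cell" is an invented
# tool name, and a plain dict stands in for the Excel workbook.

WRITE_CELL_TOOL = {
    "name": "write_cell",
    "description": "Write a value or formula into one cell of the sheet.",
    "input_schema": {
        "type": "object",
        "properties": {
            "cell": {"type": "string", "description": "A1-style address, e.g. J25"},
            "value": {"type": "string", "description": "A literal value or a formula"},
        },
        "required": ["cell", "value"],
    },
}


def apply_tool_calls(sheet: dict[str, str], content: list[dict]) -> list[dict]:
    """Carry out every write_cell request in a model reply; return tool_result blocks."""
    results = []
    for block in content:
        # Skip plain text and any tool this sketch doesn't know about.
        if block.get("type") != "tool_use" or block.get("name") != "write_cell":
            continue
        cell, value = block["input"]["cell"], block["input"]["value"]
        sheet[cell] = value
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": f"Wrote {value} to {cell}",
        })
    return results


# A hand-written stand-in for a model reply (hypothetical id; no API call here).
reply = [
    {"type": "text", "text": "Adding a total for the exit proceeds."},
    {"type": "tool_use", "id": "toolu_01", "name": "write_cell",
     "input": {"cell": "J25", "value": "=SUM(B2:B10)"}},
]
sheet: dict[str, str] = {}
print(apply_tool_calls(sheet, reply))
print(sheet)
```

In a real integration, `WRITE_CELL_TOOL` would be sent in the request’s list of tools. The `tool_result` blocks would then go back to the model so it can check its work and keep iterating. None of these pieces is new; what was missing was someone packaging them into a chat pane inside Excel.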

A few months ago, I would go back and forth with ChatGPT to figure out the right Excel formulas for XYZ thing. I would upload screenshots of spreadsheets, it would give me a formula, I would paste it in, it might work, and we would iterate until it was done. It “worked,” but the process was clunky. Now? The AI can just iterate directly in Excel in real time.

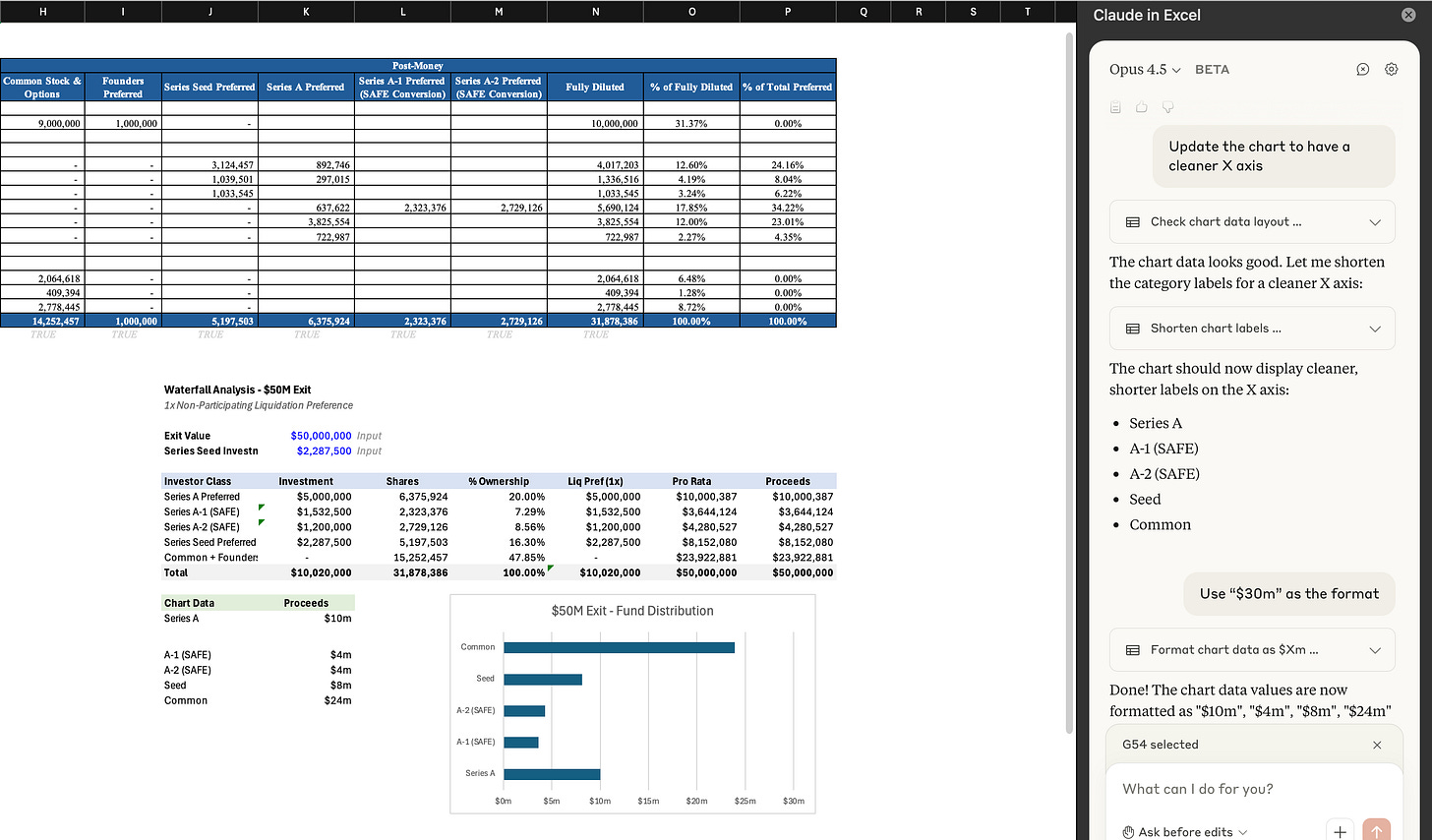

For example, I uploaded a sample Series A Pro Forma and gave Claude the following prompt:

“Create a waterfall showing how the funds will be distributed on a $50 million exit, assuming 1x non-participating liquidation preferences from all VCs. Generate your graphic in Cell J25 and work right / down from there.”

After ~10 back-and-forth messages to tweak formatting, replace hardcoded values so it only referenced cells in the model, etc., it spit out the following waterfall analysis. The whole thing took about four minutes.

This is obviously a simple example, but it still cut a ~25-30 minute task down to ~4 minutes and actually freed up more time to spot-check that the right cells were referenced, inputs weren’t hard-coded, etc. Basically, you can get to a testable final product much, much faster.

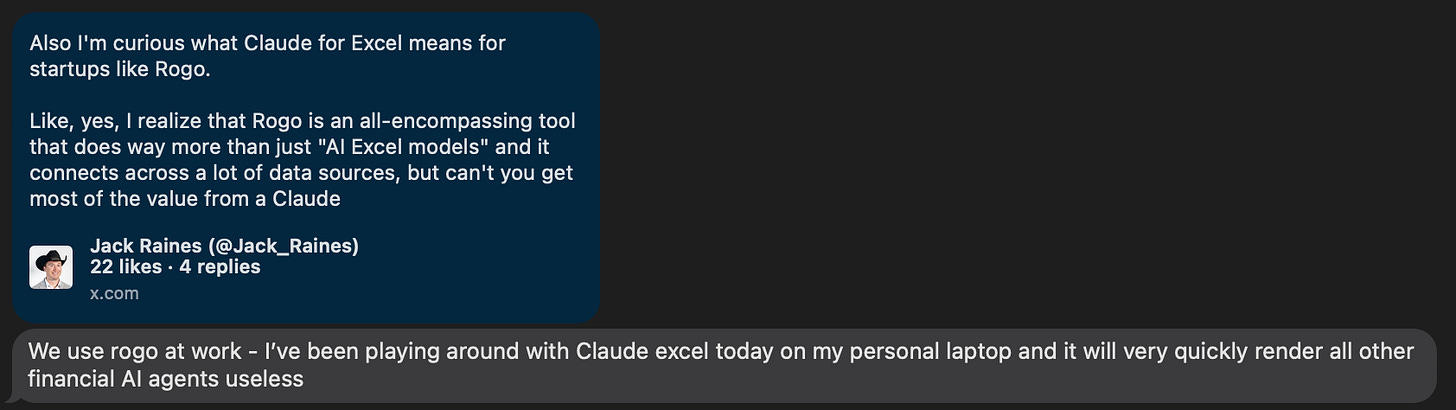
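Here is a minimal Python sketch of the same 1x non-participating logic, for illustration only. It is not the formula set Claude produced, and it rests on three assumptions:

- The cap table numbers below are hypothetical, not the ones from my sample pro forma.
- All preferences rank pari passu.
- Each preferred round simply takes the greater of its 1x preference or its as-converted share of proceeds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Series:
    """A preferred round with a 1x non-participating liquidation preference."""
    name: str
    invested: float  # 1x preference = amount invested
    shares: float    # shares held on an as-converted basis


def waterfall(exit_value: float, common_shares: float, rounds: list[Series]) -> dict[str, float]:
    """Split exit proceeds, assuming all preferences rank pari passu."""
    # Rounds with the cheapest preference per share are the first to prefer converting.
    ordered = sorted(rounds, key=lambda r: r.invested / r.shares)

    def price_if_converting(k: int) -> float:
        # Per-share value of common when the first k rounds convert and the rest take their preference.
        prefs = sum(r.invested for r in ordered[k:])
        shares = common_shares + sum(r.shares for r in ordered[:k])
        return max(exit_value - prefs, 0.0) / shares

    # Keep the largest k for which the k-th round is at least as well off converting.
    k = 0
    for i in range(1, len(ordered) + 1):
        last = ordered[i - 1]
        if last.invested / last.shares <= price_if_converting(i):
            k = i

    price = price_if_converting(k)
    prefs_total = sum(r.invested for r in ordered[k:])
    # If the exit cannot cover every remaining preference, pay them pro rata (pari passu assumption).
    pref_scale = min(1.0, exit_value / prefs_total) if prefs_total else 0.0

    payout = {"Common": common_shares * price}
    for i, r in enumerate(ordered):
        payout[r.name] = r.shares * price if i < k else r.invested * pref_scale
    return payout


if __name__ == "__main__":
    # Hypothetical cap table: 8M common shares, a $2M Seed, a $20M Series A.
    rounds = [Series("Seed", 2_000_000, 2_000_000), Series("Series A", 20_000_000, 4_000_000)]
    for holder, amount in waterfall(50_000_000, 8_000_000, rounds).items():
        print(f"{holder}: ${amount:,.0f}")
```

On a $50 million exit with these numbers, Seed converts: its $1.00 preference per share is below the $3.00 per share that common ends up worth. Series A would only get about $3.57 per share as common, so it takes its $20 million preference back. The result is Common $24 million, Seed $6 million, and Series A $20 million.

Sorting rounds by preference per share is what lets a single pass find a stable answer: no round would want to switch its choice. A real model would also need seniority, participation caps, and option pools, which is exactly the fiddly cell-referencing work described above.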

The value of “modeling skills,” at least defined as the “ability to do Excel fast,” drops to zero when you can ask AI to build a fully fleshed-out version of the thing faster than a human can type. That also strips the “value” out of a lot of entry-level white-collar labor (IB analysts, consultants, etc.), at least as the work is currently done. This also, by the way, probably kills a lot of the “AI for Excel / AI for finance” startups that popped up over the last few years, particularly as Claude continues to integrate with data providers. One of my banker buddies thought the same.

My next question: what other “financial modeling” or “coding” pursuits have 1) logic that must be followed to generate outputs and 2) a lot of training data to pull from? Protein synthesis? Something else? Idk. But this is only going to happen faster and faster.

What I’m Reading / Consuming

Fun thread from a Wharton professor on Claude’s Excel plug-in vs. Microsoft’s own Excel agent.

Fun blog post about OffDeal, the “AI-native investment bank.” I love these stories of entrepreneurs taking an existing business model and leveraging AI to apply it to markets where it previously wasn’t possible. In this case, that means using AI to build a sell-side advisory firm targeting small ($1-10M EBITDA) businesses.

Good clip of Tim Ferriss on Shawn Ryan’s podcast discussing audience capture and the widespread grift of “motivation” influencers who have built audiences telling people how to “do the thing” without ever doing anything meaningful themselves.

My buddy Jon Goodman’s new book, Unhinged Habits, goes live tomorrow, and I’m excited to dig in. Jon is one of the more interesting people I’ve met (he’s a multi-time founder and author, and he lives most of the year abroad traveling with his wife and kids). I don’t read too many “life advice-y” books, but Jon has very much “walked the walk,” so I’m pumped to check out his new work.

Tim Clissold’s Mr. China is a fantastic memoir for anyone interested in what “investing in China” actually looked like in the 1990s, when the Chinese economy started to take off. Tim partnered with a few investors to raise and deploy hundreds of millions of dollars in China on the thesis that you could mint some pretty profits by providing capital to businesses in a country poised for a GDP explosion. His account of the absurdities he encountered all over the country, from scams and grifts to pure confusion in rural desert factories, is excellent.

- Jack

I appreciate reader feedback, so if you enjoyed today’s piece, let me know with a like or comment at the bottom of this page!

A recent post from "Secret CFO" hits on the big questions underneath the theme of this post...

"Here’s something finance teams are going to have to figure out fast with AI…

Where the goal Is the outcome itself vs where the process is part of the goal. I.e. you wouldn’t send a robot to the gym on your behalf because the goal is not for the weight to be lifted, it’s to lift the weight.

Some tasks in finance… the objective is simply the outcome (transaction processing prime example) others the process is just important.

Ie budgeting is more valuable than the budget itself (imo).

Working out where this dividing line sits is going to determine where AI is most valuable and most dangerous.

This gets abstracted one level further when you start to think about the influence on entry level roles and developing base line skills."

Play around with Shortcut.ai or Tracelight.ai